Roboscope. Part I

Advanced spatial computing platform

From HoloLens to Mobile

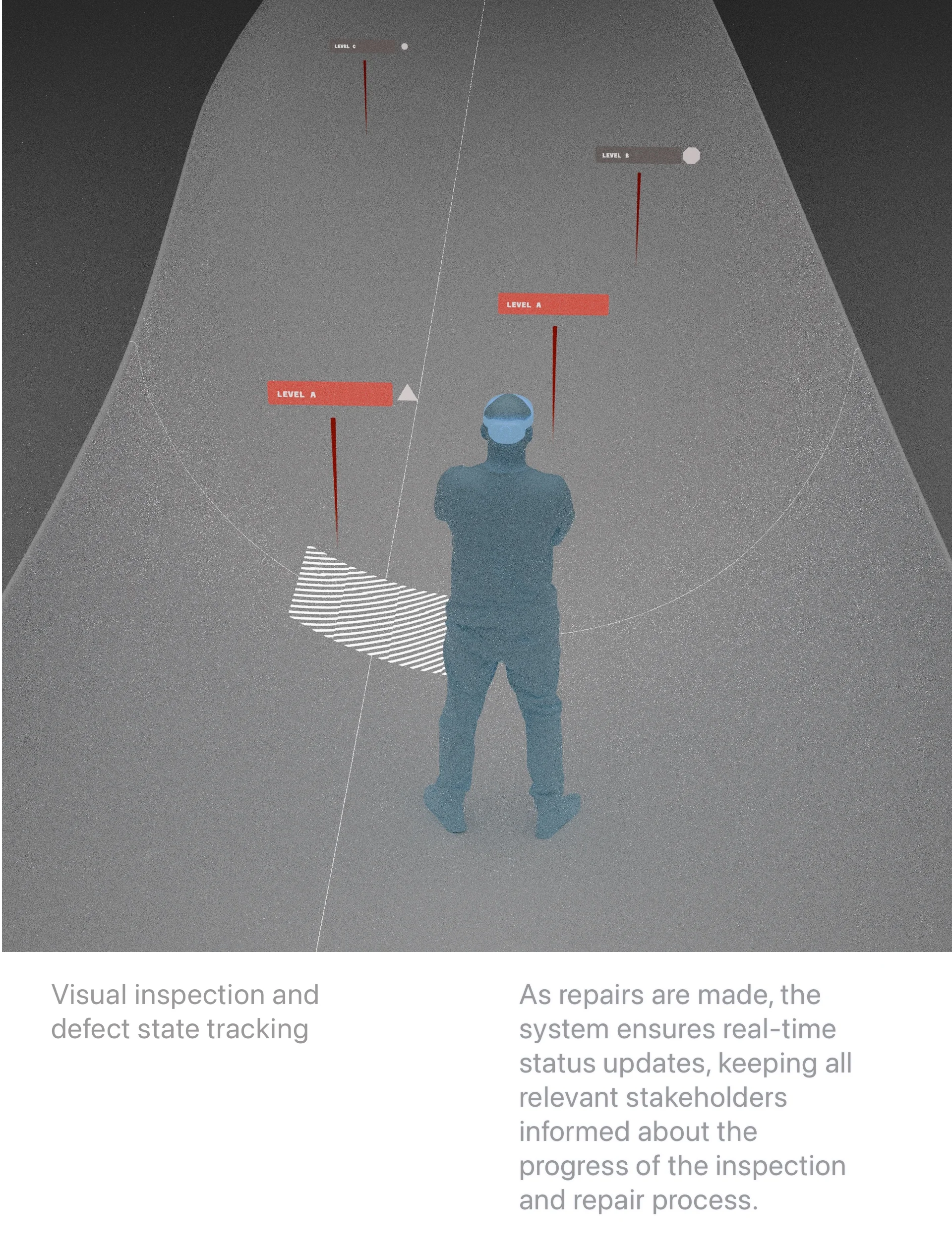

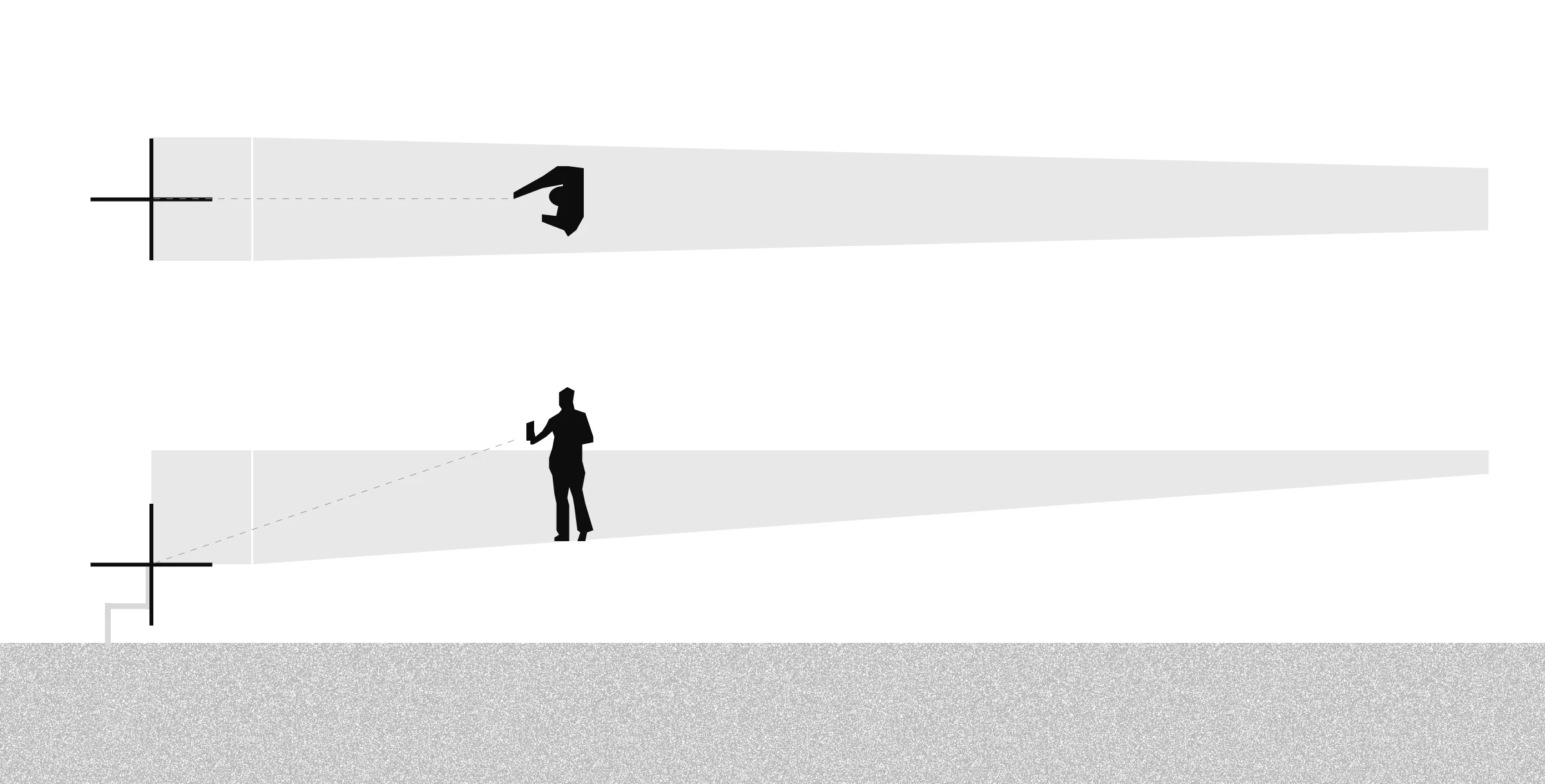

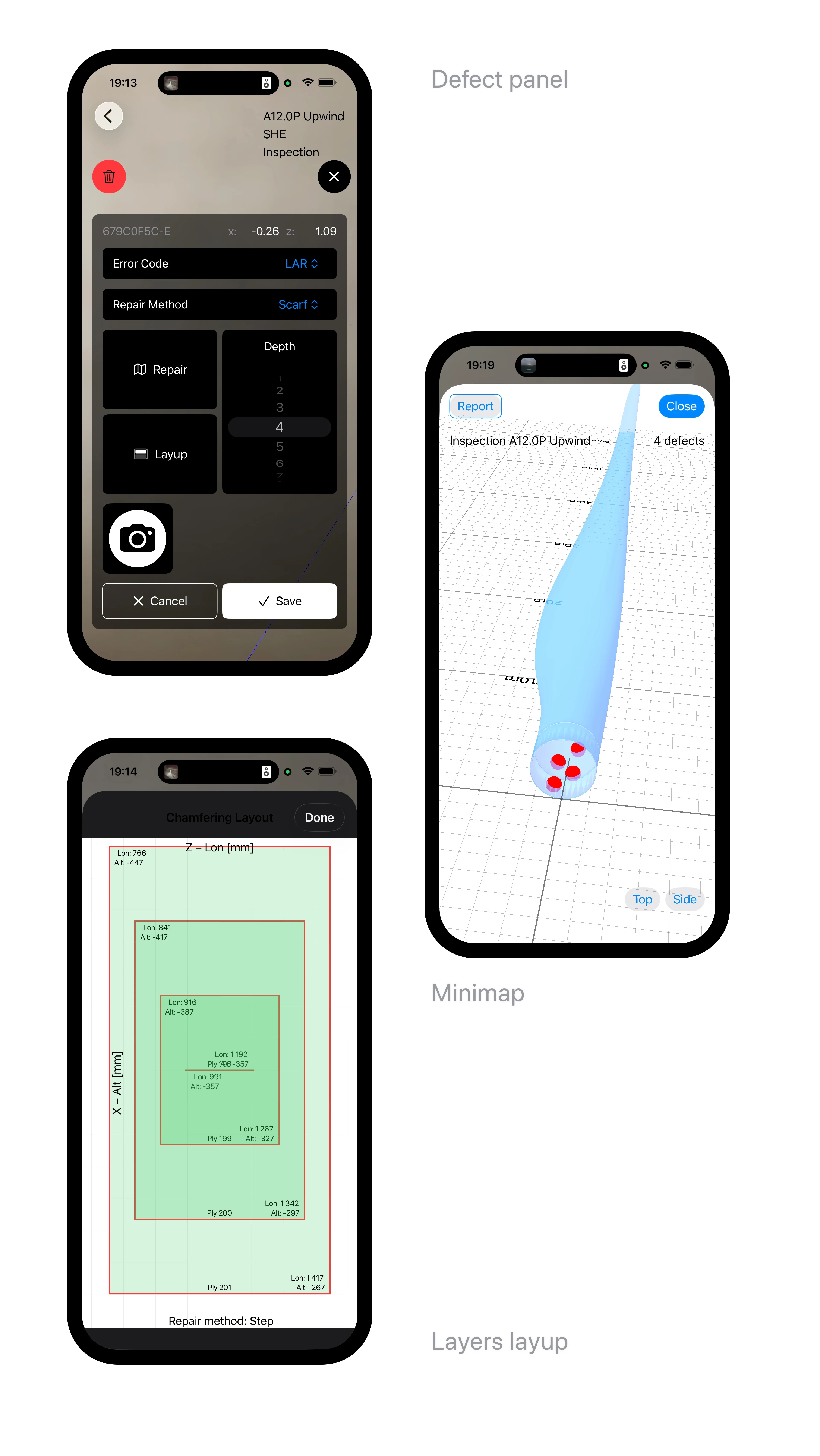

Marking a defect typically takes at least five measurements (sometimes almost twice as many): to locate the defect, capture its dimensions, and measure all distances along curved surfaces in a crowded, noisy environment, on blades up to a hundred meters long. On top of that, handwritten forms still have to be typed into the ERP system later.

Edge Cases and Integration

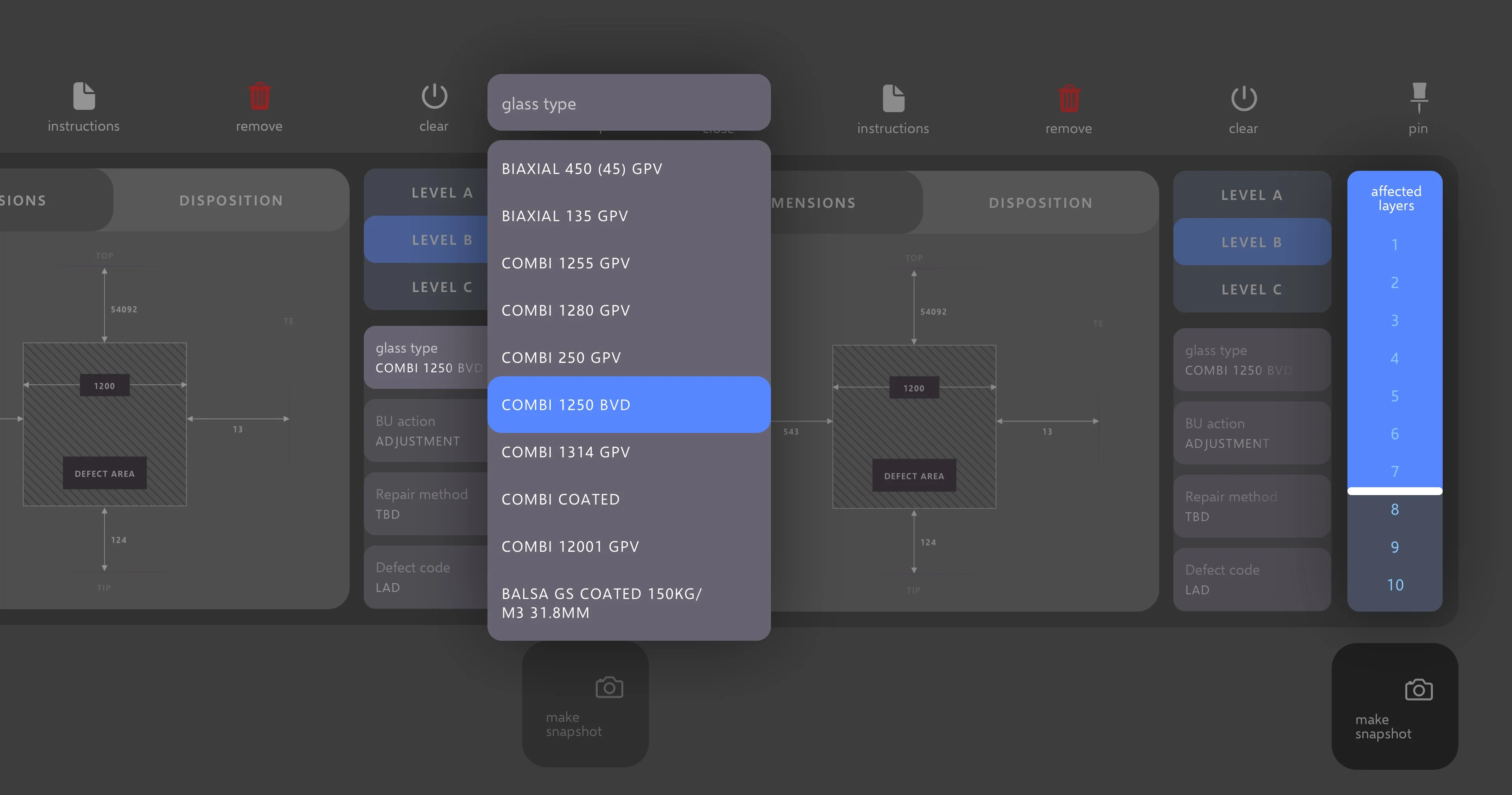

Edge cases were another major challenge — sometimes quite literally at the blade’s edge. When a technician on the floor can’t decide how to repair a defect, they take several photos and send them to an engineer, who replies with a solution. Even though this flow is supported by an internal QC system, it still involves a lot of manual work: composing emails, logging into systems, checking for replies, and constantly switching tools. These cases are rare but they always block production and are expensive. Having all defect registration tools and QC system integration in one place felt obvious, but took a long time to realize.

As a wearable solution, Spector also freed up the technician's hands. Despite the clumsy hardware and the discomfort it caused, it was still genuinely useful — especially in an environment where helmets and safety glasses are required anyway.

But HoloLens never really took off. Microsoft shut the line down just as we completed the pilot phase. By the end of 2024, after five years of work, hundreds of thousands of dollars invested, and countless battles with big-company bureaucracy, it felt like we were left with nothing.

Almost

The Swift Switch

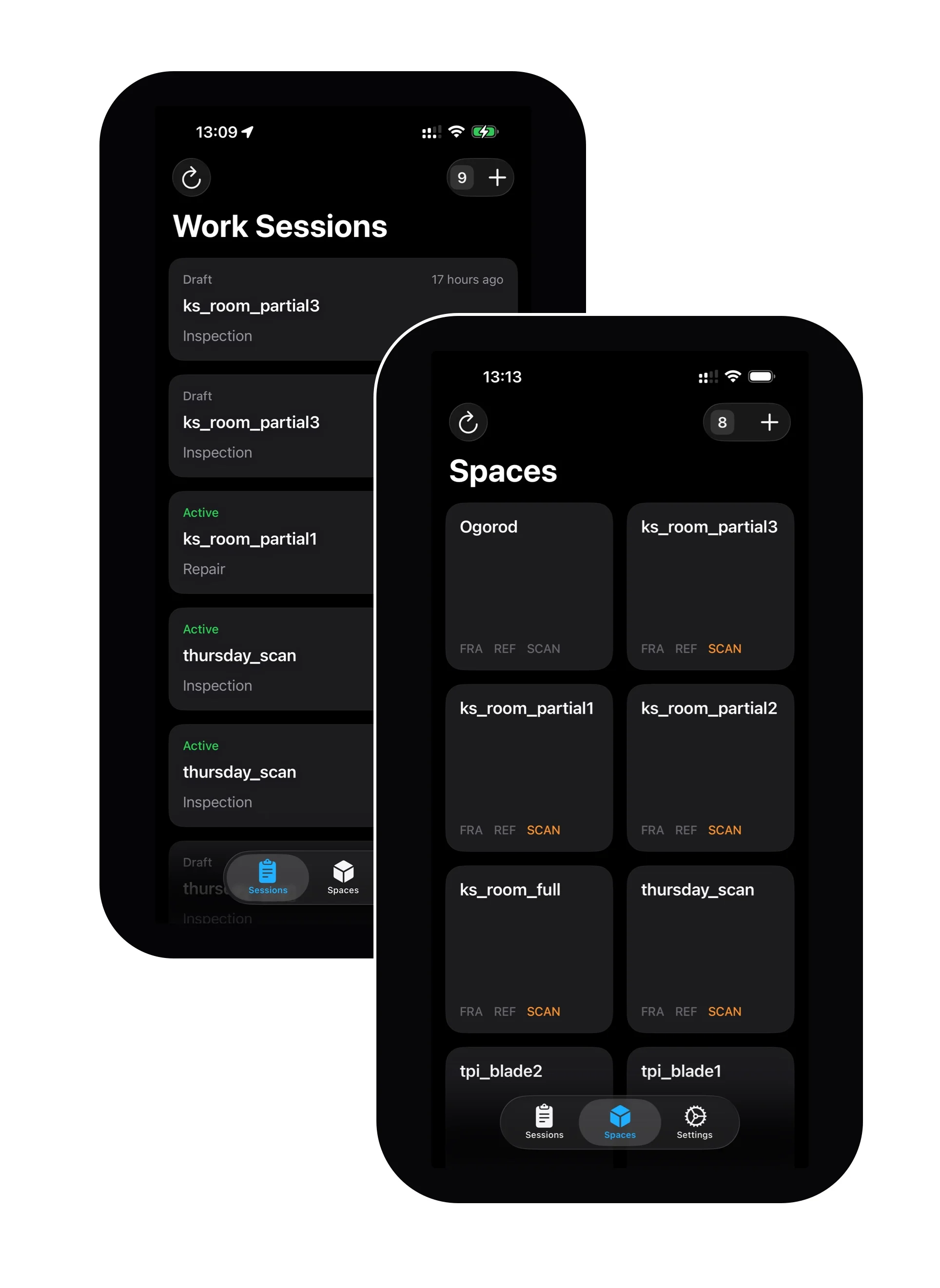

Overnight, we switched to mobile. Fortunately, our contract already allowed for mobile devices, and that's how Roboscope was born.

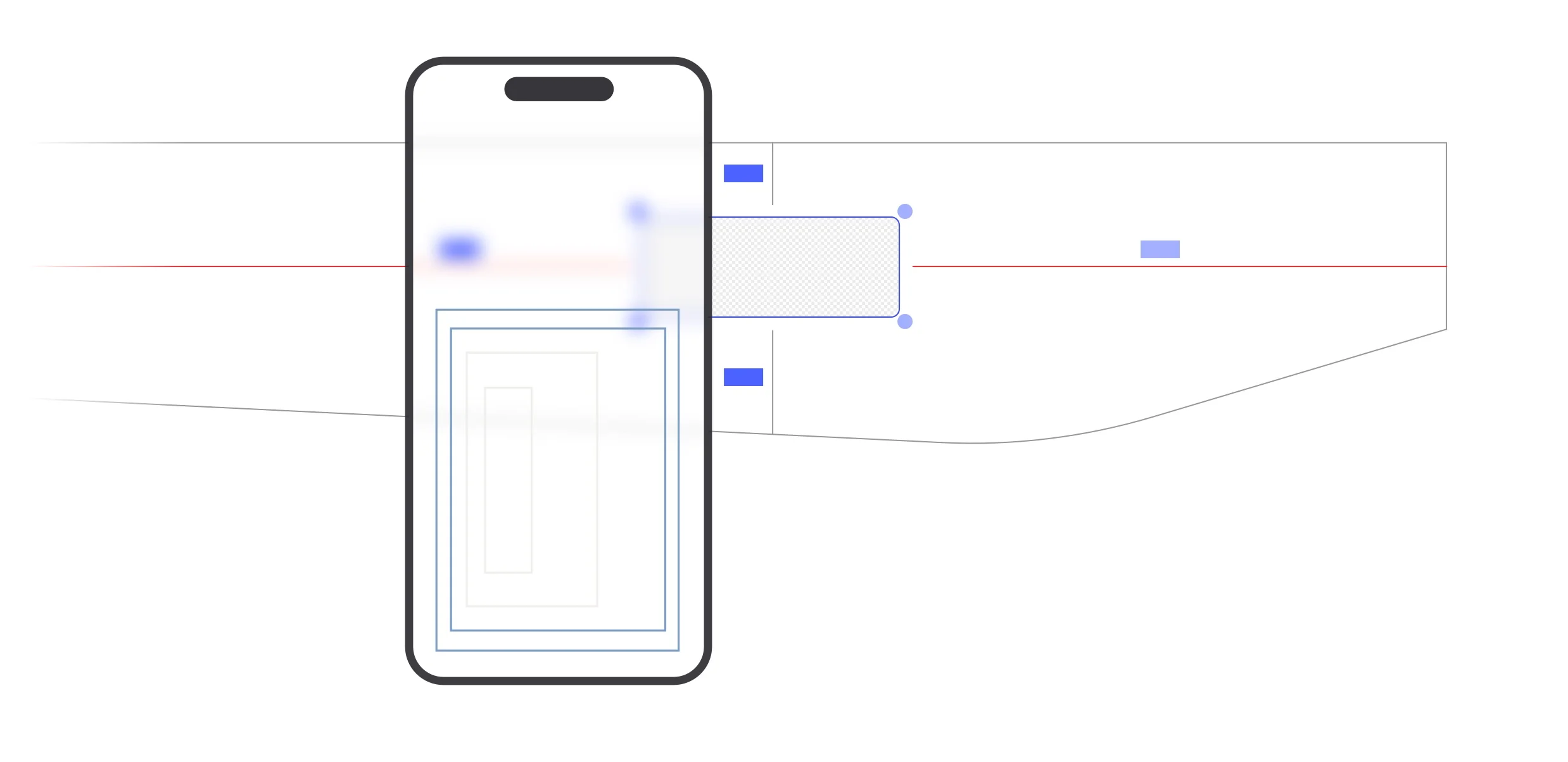

The switch itself was fast — by then we had rebuilt Spector from scratch more than once. What worried us was iPhone usability on the floor, where at least one hand is always occupied. We also didn’t know how precise phone-based AR would be, even though for our use case ~5 cm accuracy is more than enough.

It turned out the iPhone app performed well: we refined spatial marker interactions, achieved sufficient accuracy, and, most importantly, removed the steep learning curve that had haunted HoloLens. It was a real relief.

New Beginnings

Within two months we had several floor tests scheduled, and the project moved into its final stage — terms, pricing, device choices, and integration (which was already about 80% complete). Then, in summer 2025, bad news: our client was being broken up. The company would cease to exist within a few months.

That could have been the end of the story. It wasn't.

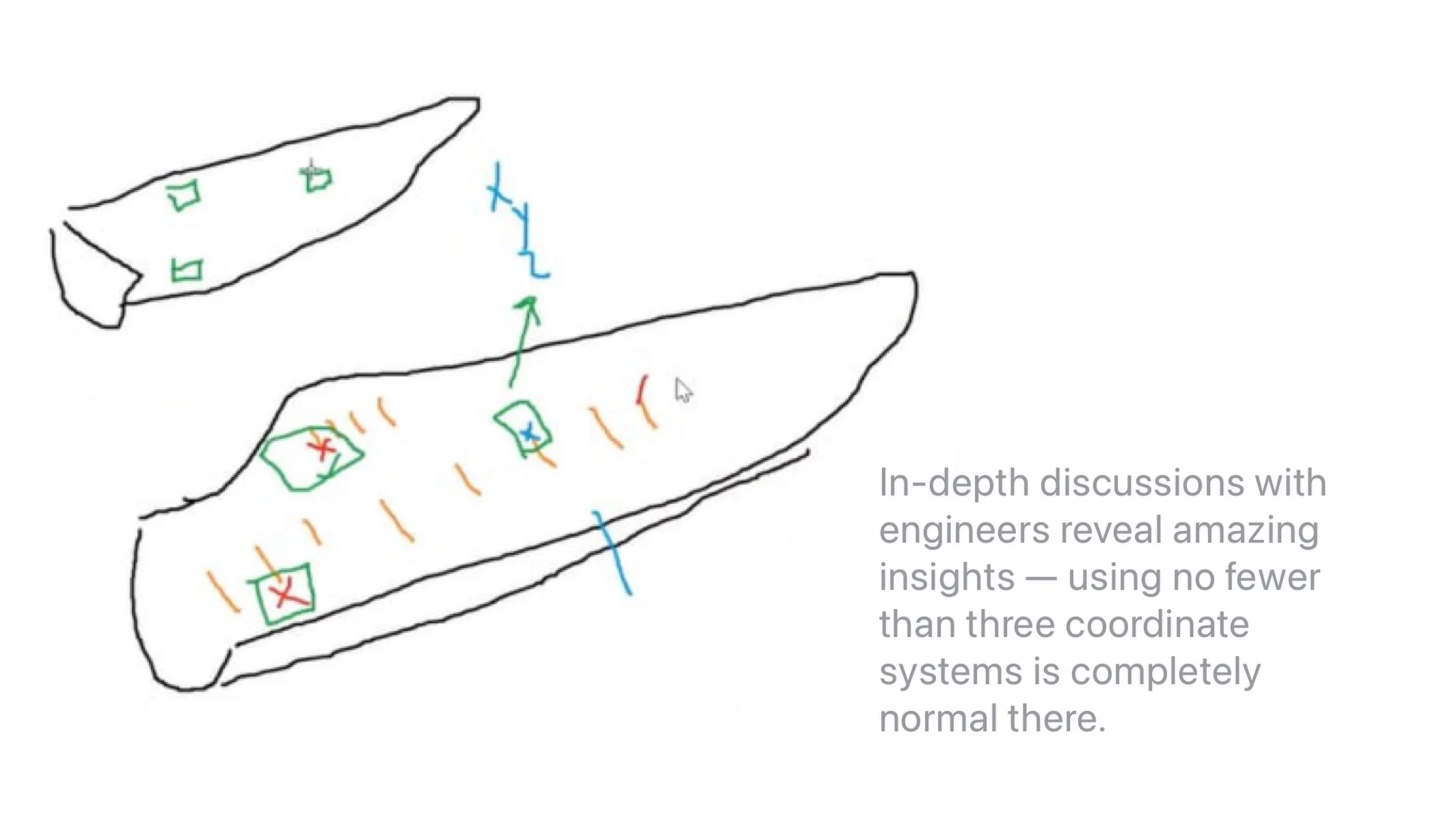

There are many wind blade manufacturers worldwide, and we also started exploring adjacent industries like construction and shipping. After talking with engineers there, we discovered very similar spatial marking challenges. We gathered all of this, went to market, launched the landing page, ran an email campaign, and reached out to every contact we had.

It worked

In September 2025 we ran our first tests with a new potential client — again a blade manufacturer, who had heard about our solution from a competitor years before.

Current Challenges

Over the following months, we completed a series of tests that validated the solution's reliability. At the same time, we discovered a consistent 1% drift in the iPhone AR coordinate system, which adds up to almost a meter of error at the tip of the longest blade. We're working on this issue right now, exploring several approaches:

- Recalibration (not ideal)

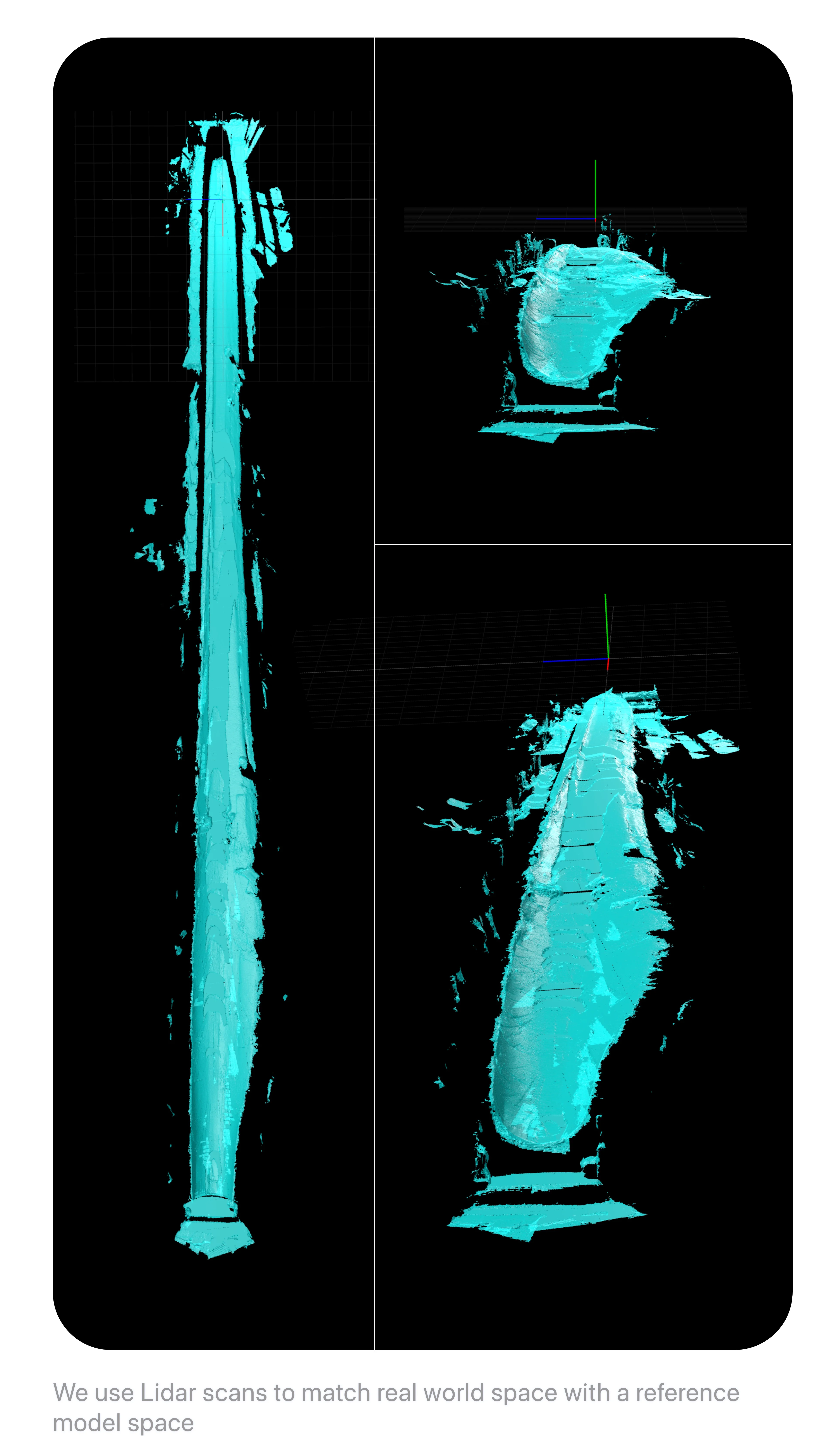

- Creating a fresh coordinate system for each marker based on the scanned environment (promising but not yet finished)

- Using real-world anchors such as the laser marks already present on the factory floor (the most reliable solution, but it requires significant involvement from the engineering staff)

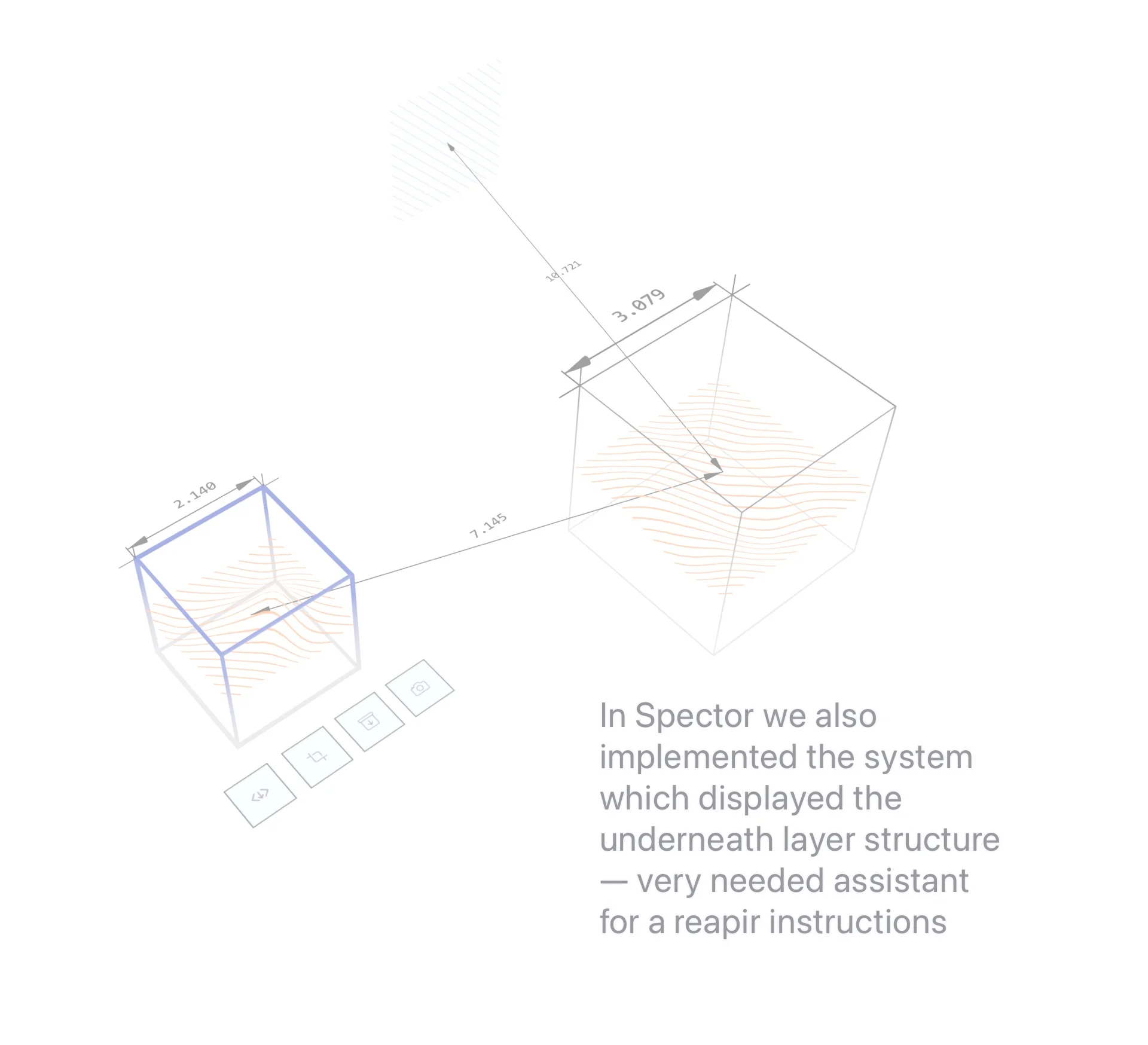
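To make the drift numbers concrete, here is a minimal sketch of the arithmetic in Python. It assumes the error grows linearly with the distance covered since the last reference point, which is a simplification consistent with the 1% figure but not a measured model. The function names, and the idea of measuring from the nearest anchor, are illustrative assumptions rather than a description of how Roboscope works.

```python
# Hypothetical helpers: a linear drift model, assumed for illustration only.

def drift_error_m(distance_m, drift_rate=0.01):
    """Accumulated position error after moving distance_m from the origin."""
    if distance_m < 0 or drift_rate < 0:
        raise ValueError("distance and drift rate must be non-negative")
    return distance_m * drift_rate


def max_distance_within_tolerance_m(tolerance_m, drift_rate=0.01):
    """How far one can move before the error exceeds tolerance_m."""
    if drift_rate <= 0:
        raise ValueError("drift rate must be positive")
    return tolerance_m / drift_rate


def max_anchor_spacing_m(tolerance_m, drift_rate=0.01):
    """Largest spacing between real-world anchors that keeps the error
    within tolerance, assuming each marker is measured from the nearest
    anchor (so the worst case is half the spacing)."""
    return 2 * max_distance_within_tolerance_m(tolerance_m, drift_rate)


if __name__ == "__main__":
    print(drift_error_m(100.0))                   # error at the tip of a 100 m blade
    print(max_distance_within_tolerance_m(0.05))  # reach for a 5 cm tolerance
    print(max_anchor_spacing_m(0.05))             # anchor spacing for a 5 cm tolerance
```

Under these assumptions, a 100 m blade accumulates about 1 m of error, while the ~5 cm accuracy target holds only within roughly 5 m of a reference point. That is why a single origin is not enough and why local coordinate systems or floor anchors look attractive.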

And one more thing. Over the last two years, we've been incorporating ML vision into the platform, both in the client app and on the backend. We trained models for various cases: defect types, defect structure, paintwork issues, and circularity issues (how much a real object's curve differs from a circle). All of this still needs adjustments and, above all, more data, but visual detection should be a significant part of the solution and help complete a full working cycle.
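As an illustration of what "circularity" can mean numerically, here is a small Python sketch that fits a circle to 2D contour points and reports how far the points stray from it. This is a classical geometric baseline (an algebraic least-squares fit, often called the Kåsa fit), not the ML approach described above. The function names and the choice of "max minus min radial distance" as the metric are assumptions made for this example.

```python
import math


def fit_circle(points):
    """Algebraic least-squares circle fit. Returns (cx, cy, r)."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")
    # Shift to the centroid so the linear system reduces to 2x2.
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    suu = suv = svv = suz = svz = sz = 0.0
    for x, y in points:
        u, v = x - mx, y - my
        z = u * u + v * v
        suu += u * u
        suv += u * v
        svv += v * v
        suz += u * z
        svz += v * z
        sz += z
    det = suu * svv - suv * suv
    if abs(det) < 1e-12:
        raise ValueError("points are collinear or degenerate")
    # Center in centroid-relative coordinates (Cramer's rule).
    uc = (suz * svv - svz * suv) / (2 * det)
    vc = (svz * suu - suz * suv) / (2 * det)
    r = math.sqrt(uc * uc + vc * vc + sz / n)
    return mx + uc, my + vc, r


def out_of_roundness(points):
    """Max minus min distance from the fitted center (0 for a perfect circle)."""
    cx, cy, _ = fit_circle(points)
    d = [math.hypot(x - cx, y - cy) for x, y in points]
    return max(d) - min(d)


if __name__ == "__main__":
    # A slightly squashed circle: semi-axes 3.1 and 2.9.
    pts = [(3.1 * math.cos(math.radians(k)), 2.9 * math.sin(math.radians(k)))
           for k in range(360)]
    print(out_of_roundness(pts))
```

In a real pipeline, the points would come from a detected contour, and a metric like this could serve as a sanity check alongside the model's output.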